Hi

Hope you had excellent weekends and that the week ahead is shaping up well for you all. A few headlines from the world of AI to kickstart your week:

The battle for AI ownership: OpenAI’s News Corp Deal and just who owns Scarlett Johansson’s voice?

Big moves on the question of copyright and ownership in the last week. OpenAI and News Corp have inked a historic agreement, giving OpenAI access to current and archived content from publications like The Wall Street Journal, the New York Post, The Sun, etc. Massive implications on a number of fronts – think Perplexity summaries but for your news (and in all seriousness, will we check the original sources each and every time? Probably not). Who picks the mix/preference order by which angles on a given topic are taken? Can the weights of sources be tweaked to user preference? If so, bye-bye Overton Window 👋 And just what does this mean for journalism as a field?

Oh, and did you hear about Scarlett Johansson vs Sam Altman? Long story short – she alleges Sam Altman and co tried stealing her voice for their new GPT-4o model. In an extremely meta take, you can hear her statement read by the “Sky” OpenAI voice here.

If it’s not an outright clone, it’s eerily similar/way too close to the original – which OpenAI have acknowledged by taking the voice down. What does this mean? There are suggestions this shines a useful light on the dark heart of generative AI – a technology that is: built on theft; rationalised by three coats of prime legalistic posturing about “fair use”; and justified by a worldview which says that the hypothetical “superintelligence” tech companies are building is too big, too world-changing, too important for mundane concerns such as copyright and attribution. Interesting times indeed.

Microsoft and Apple’s AI showdown: from Copilot+ to WWDC 2024

So it’s clearly conference/announcement season – not content to let OpenAI and Google have all the fun, Microsoft held its Build conference last week too. There, Microsoft doubled down on infusing AI across its tech stack – from Copilot+ PCs supercharged by OpenAI’s latest language models to new Azure AI cloud services and tools. It will be interesting to see how these play out – and also how Apple responds at its Worldwide Developers Conference in a couple of weeks’ time. Watch a recap of Microsoft Build below.

However, one announcement that raises privacy concerns is Windows 11’s “Recall” feature. Using dedicated AI chips, Recall constantly records and locally stores snapshots of your on-screen activity to build a searchable history. Microsoft has offered assurances about encrypting the data and giving users controls, and the feature is convenient for finding information across apps and browsing. Even so, the level of always-on monitoring Recall requires is a significant tradeoff that could make many uncomfortable without robust security guarantees.

Does A.I. know what I’m thinking?🤔 AI’s Theory of Mind and the Race for Regulation

A super-interesting question in the wake of the departure of Ilya Sutskever (OpenAI’s Chief Scientist) and Jan Leike (OpenAI’s Head of Superalignment – with the latter stating that, over the past years, “safety culture and processes have taken a back seat to shiny products”): does AI have a theory of mind? i.e., can it understand others’ mental states?

A fascinating new paper suggests GPT-4 performs at a human level in identifying indirect requests, false beliefs, and misdirection – with weak spots coming from system prompts rather than the model itself.

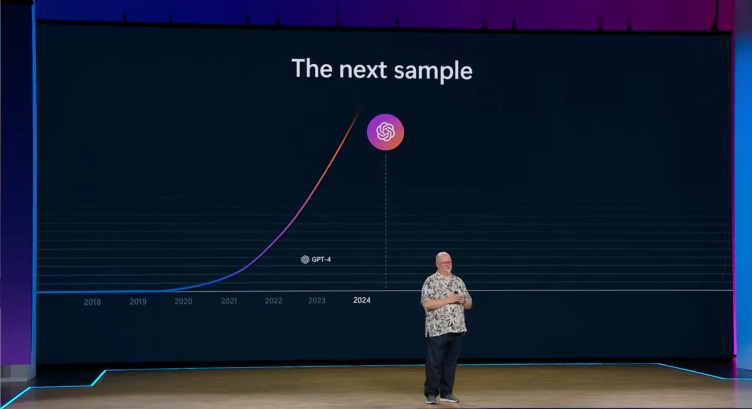

There has been immediate pushback on this, with experts asking why this even matters. Fair point but, just speaking for myself, when I hear Microsoft’s CTO talking about how AI abilities are going to keep scaling exponentially for some time to come, I have to wonder what emergent properties will come with all that compute. (Hear more about sharks, killer whales, and just how mind-bendingly enormous/powerful the next generation of AI will be here.)

This is important context for the AI Seoul Summit 2024 and warnings that we are not moving rapidly enough to address this problem. Against this, I love the work that Anthropic (the creators of Claude) are doing in the alignment space, as well as the fascinating things they’re doing to peer into the black box of production LLMs. More of this!

PwC’s 2024 AI Jobs Barometer: Productivity, Growth, and Navigating the AI Career Landscape

PwC have released their 2024 AI Jobs Barometer. Headlines? Sectors with high AI exposure are seeing nearly 5 times higher productivity growth compared to less AI-exposed sectors. In the UK, AI job postings are growing 3.6 times faster than overall listings, with a 14% wage premium for AI roles, indicating AI is supplementing rather than replacing workers. While currently concentrated in a few sectors, as AI improves and spreads further, its potential to transform industries and jobs and to drive productivity growth could be much greater.

This aligns largely with the Microsoft/LinkedIn 2024 Work Trend Index Annual Report that we covered in an earlier edition of this newsletter (which is definitely worth a look if you haven’t already), but the real question is: what do you do about it? Cue Allie K Miller with tips for people at all levels in How to Accelerate Your Career in the AI Age:

Entry-level? Make your resume AI-competitive by getting certified and experimenting with tools like ChatGPT. Individual contributor? Become an “AI power user” by building AI-first habits and rethinking how work gets done. Manager? Empower your team’s AI potential with enabling policies and incentives for adoption. Executive? Operationalise AI across your organisation with a bold vision and strategy for ethical, innovation-driving implementation. No matter your role, proactively levelling up your AI skills is a career accelerant in the new age of work.

AI in Education – the podcast

Need a good podcast on the subject of AI x education? Check out the AI in Education podcast by Dan Bowen 🎸 and Ray Fleming – some great discussions on the topic with excellent guests: Jason Lodge Guiding Through Assessments, Adam Bridgman and Danny Liu on the University of Sydney and the future of assessment, News and Research Roundups – definitely worth a listen!

We hope this edition of the newsletter has been of interest to you. If you’re new here and it’s been useful, please do click subscribe and you can expect a weekly update every Monday from now on. If you’re already a subscriber – thanks for your ongoing interest and support! Either way, if you know others who might benefit from reading this weekly, please forward it on to them too.

Have a great week ahead and let us know if there’s anything we’re missing that we should add to make this newsletter more useful for i) yourself and/or ii) others. This is a fast-moving, ever-evolving space and we greatly value any and all feedback. 🙏