Hi

Hope you had wonderful weekends and that the week is off to an excellent start! It’s steadily getting hotter here in Hanoi – not yet breaking that 40°C/104°F and humid RealFeel but we’re getting there. A few headlines from the world of AI, sent from under my air-con, to kickstart your week:

Meet Claude 3.5 Sonnet: Raising the Bar in AI Language Models

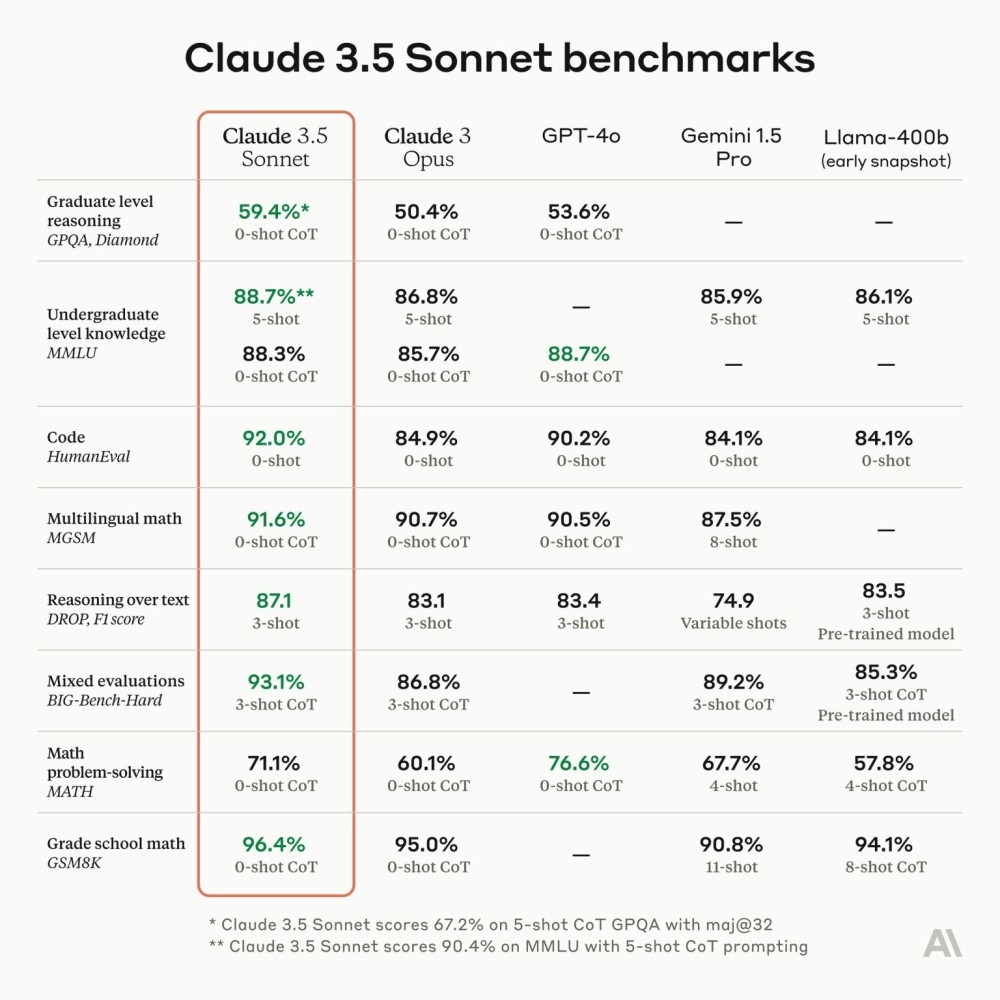

There’s a new king of the AI castle. Anthropic, long a favourite of writers and content developers, have released Claude 3.5 Sonnet and it’s impressive – beating out OpenAI’s GPT-4o, Google’s Gemini 1.5, and Llama 3 (see below) by a decent margin in some cases.

Claude 3.5 Sonnet > Claude 3, GPT-4o, Gemini, and Llama 3

Given Claude models come in “families”, with Sonnet being the mid-sized model (sitting between the smaller, faster Haiku and the larger Opus), it will be very interesting to see what happens when they release the 3.5 versions of Haiku and Opus. 👀 🍿

Other interesting things? Well, apparently the vision capability (the ability to interpret images, charts, and diagrams) is a lot better, but the best part is the new Artifacts feature. Kind of like a preview window, Artifacts are a dynamic workspace where you can see, edit, and iterate on Claude’s creations in real time – very cool. So if you’ve been waiting for a sign to try an AI other than ChatGPT, I’d say this is your overdue invitation…

As an aside, have you ever seen those AI aptitude metrics (the image above) and wondered what they mean? Three in particular stand out in the HE context:

- Graduate-level reasoning (GPQA, Diamond): This measures the model’s ability to answer graduate-level science questions in biology, physics, and chemistry – think of it like the research aptitude part of the model.

- Undergraduate-level knowledge (MMLU): This tests the model’s grasp of undergraduate-level knowledge across a broad range of subjects – think of it as a measure of how the model stacks up against what UG students are expected to know.

- Mixed evaluations (BIG-Bench-Hard): This is the fun one. Let’s say I tell Claude a joke: “A man walks into a bar. Ouch.” This metric measures its ability to holistically pick up on the punchline and respond in a coherent fashion – e.g., “Ah yes, the classic bar-to-the-face manoeuvre. A bold move, but not always the wisest choice for an entrance.” 😂

Runway’s Gen-3 Alpha: The Future of AI Video Generation and Beyond

Another week, another video model changing the game. Runway have released their new Gen-3 Alpha model. This latest iteration represents a major leap forward in terms of fidelity, consistency, and control over the video output. Gen-3 Alpha was trained jointly on both videos and images, enabling it to generate highly photorealistic human characters and scenes with an impressive level of cinematic detail and fluidity of motion. All of the release videos are worth a look, but a particular shoutout goes to the light patterns and refractions in “subtle reflection of a woman on the window of a train moving at hyper-speed in a Japanese city” and the ever-changing background detail in “an astronaut running through an alley in Rio de Janeiro”. Amazing stuff!

But Nick, you might ask – why do you keep banging on about video generators? They’re awesome but most of us don’t make videos. Fair question and admittedly a big chunk of it has to do with deepfakes, politics, and other things – case in point: the below picture of Biden and Trump enjoying ice creams together on their bikes took me about 30 seconds to make with Midjourney.

#bestbuds

But then, Runway have gone on record as saying that video generators are just an intermediate step to creating something they call “general world models”. Essentially “an AI system that builds an internal representation of an environment, and uses it to simulate future events within that environment”, these are starting to pop up in a few places. You might have seen NVIDIA’s Earth-2, aka a digital twin of our planet – the potential for simulations and experiments is pretty exciting.

Oh, and when you remember that Yann LeCun (Meta’s AI boss) has spoken about the need for AI to move beyond text into sensory data to keep developing further, world models offer a strong potential path.

Classroom AI: Victoria’s Rulebook for the Digital Age

As AI systems become more advanced and integrated, it’s good to know that both policy and the law are moving (albeit slowly) to meet the challenge – to try and ensure they remain trustworthy and aligned with ethical principles. The Victorian Government recently released advice for school leaders and teachers around how to use AI tools in a safe and responsible way.

Some good points on data privacy, academic integrity, defamation, etc., but it was also interesting to see the following: “staff must also be directed to not use generative AI tools to communicate with students and parents in ways that undermine authentic learning relationships or replace the unique voice and professional judgement of teachers and school leaders. This includes not using generative AI tools to directly: communicate with parents or students; make judgements about student learning achievement or progress; or write student reports for parents or carers.” This suggests a clear concern about over-reliance on AI and a desire to preserve the unique role and professional judgement of teachers in areas like communicating with students/parents and assessing student learning. Great to see!

Cheating 2.0: Why AI Hasn’t Sparked an Academic Integrity Crisis

Worried about the death of the essay? Thinking students will just use AI to cheat? Interesting new research from the Stanford Graduate School of Education suggests the focus on AI chatbots and student cheating may be misplaced and that cheating hasn’t actually increased at all.

Denise Pope and Victor Lee argue that long-standing cheating issues are linked to deeper, systemic reasons students feel disconnected or lack purpose in their schoolwork – not just access to new technologies. They recommend that schools address these underlying causes, rather than simply trying to detect or ban the use of AI. Food for thought.

From Proteins to Pachyderms: AI’s Wild Ride Through Science

It’s a fun space, the AI one, but sometimes I am just struck dumb by the scale of it… Take, for example, AlphaFold – the tool that is transforming our understanding of the molecular machinery of life. The “old” model, released in 2020, has already been used to predict hundreds of millions of protein structures – research that would have taken hundreds of millions of researcher-years at the current rate of experimental structural biology. Welp, they have a new model, it’s amazing, and they’ve made elements of it freely available to the world in the hope “it will transform our understanding of the biological world and drug discovery”. Phenomenal, world-changing stuff.

Oh and did you know that elephants have names? How cool is that?!? 🤩😍🐘

We hope this edition of the newsletter has been of interest to you. If you’re new here and it’s been useful, please do click subscribe and you can expect a weekly update every Monday from now on. If you’re already a subscriber – thanks for your ongoing interest and support! Either way, if you know others who might benefit from reading this weekly, please forward it on to them too.

Have a great week ahead and let us know if there’s anything we’re missing that we should add to make this newsletter more useful for i) yourself and/or ii) others. This is a fast-moving, ever-evolving space and we greatly value any and all feedback. 🙏