Hi

Hope you had wonderful weekends wherever you might be. I’m counting down to 🇬🇧 summer travel very soon so did my level best to eat all the bun cha, pho, nem, and banh mi I could find 🤤 #goodtimes

Anyway, enough food talk! Here are a few key headlines from the world of AI to kickstart your weeks:

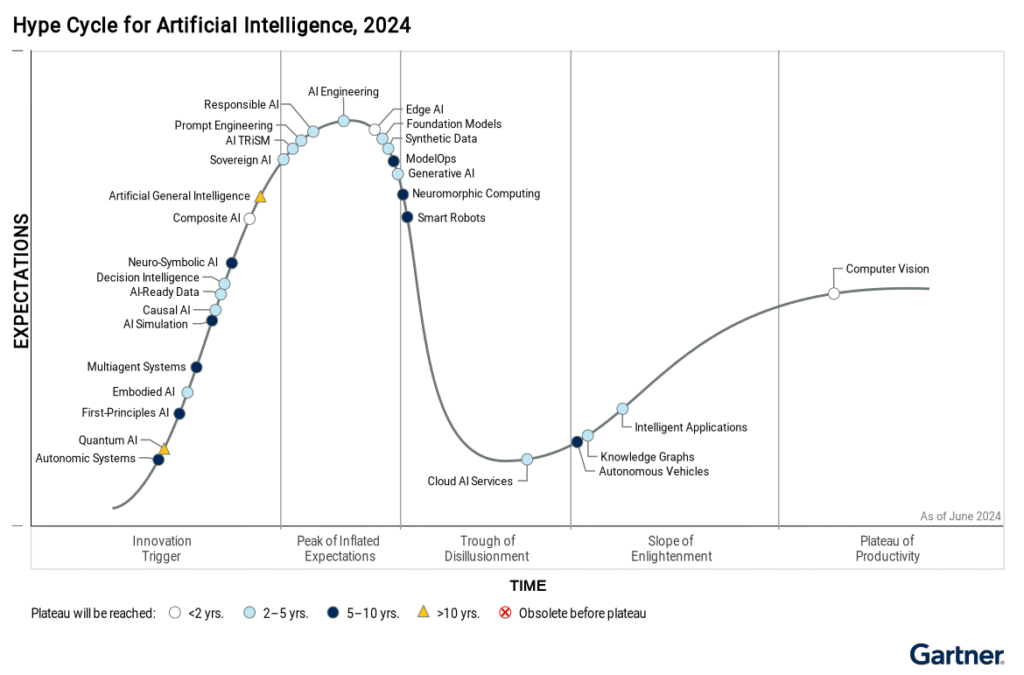

Gartner’s AI Hype Cycle: Navigating the Peaks and Valleys of AI Innovation

The Gartner Hype Cycle maps the maturity, adoption, and social application of AI technologies – helping organisations understand which AI technologies are emerging, which are peaking in hype, and which are sliding into “the trough of disillusionment”. While Generative AI has cooled off somewhat from its 2023 peak of inflated expectations, it’s still driving innovation across the AI landscape (see pic).

The Gartner AI Hype Cycle 2024

Gartner reports seeing a growing focus on composite AI approaches – think of it as the AI world’s greatest hits album, mashing up different techniques to tackle tougher problems. And, as AI starts muscling its way into more critical applications, there’s a justified and increasing buzz around governance, risk management, and safety. Keep your eyes peeled for AI engineering (robots improving robots – what could go wrong?!?) and the emergence of “sovereign AI” – nations wanting to keep their AI cards close to their chest. Interesting times ahead on the geopolitical front 😨

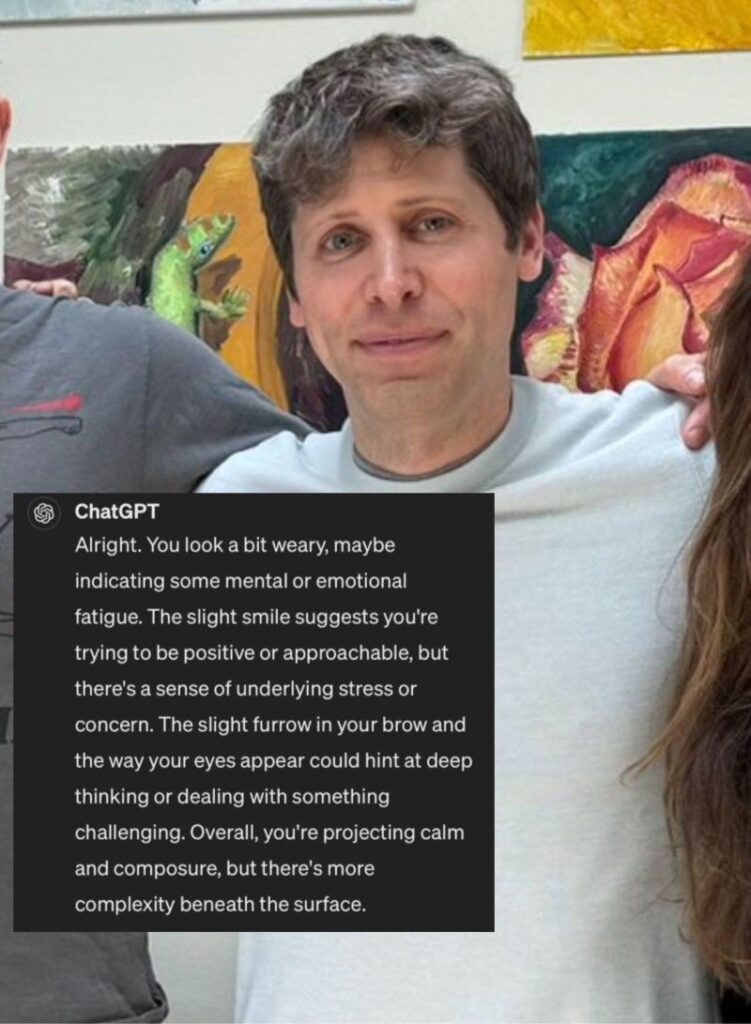

Otherwise, if we’re talking hype, consider this salutary lesson about AI’s propensity to “hallucinate” (aka “lie confidently” aka “bullshit”) when analysing a person’s psychological state from a single picture (let’s call our volunteer “Sam A” – pic below).

You okay Sam A? You look tired…

And then in an awesome burn, even AI itself acknowledges there are huge problems with that approach (original thread here)

🔥🔥🔥

Global AI Regulations: A Fragmented Landscape

Great study conducting a comparative analysis of global AI regulations. Many countries are basing their regulations on the OECD AI principles focusing on human rights, transparency, safety, and accountability. However, they prioritise these principles differently and implement them in varied ways. This creates significant global divergence with some striking outliers. Case in point: China is the only jurisdiction to establish a licensing regime for certain AI applications, specifically for recommendation algorithms in a news context. This highlights China’s distinct regulatory approach, focusing on specific technologies rather than broad risk categories.

Many jurisdictions are adopting risk-based regulatory frameworks, with stricter rules for “high-risk” AI systems – only, the definition of “high-risk” varies between countries. Clearly there’s still room for international coordination. One area where alignment might be particularly useful is content watermarking for AI-generated outputs. Surprisingly, only a few jurisdictions (China, EU, and US) require it – interesting given the growing concern about deepfakes and AI-generated misinformation. The fact that this isn’t a more widespread requirement stands out, especially considering its potential importance for transparency and accountability in AI-generated content. 🤔

Or perhaps they could start with subtler, more emotional applications – tugging at your heartstrings and pushing buttons. Remember Hume.ai (“the world’s first voice AI that responds empathically, built to align technology with human well-being”)? We’ve covered this before, but it is worth noting that AIs of this sort will soon be banned in European workplaces and educational settings (great breakdown from the ever-insightful Barry Scannell here). It is also worth reflecting on other applications outside of those safe spaces. Imagine walking past a vending machine that knows your name and your buying history (and so your fondness for a Sprite or Coca-Cola on a hot summer Tuesday afternoon), and that can then use emotion recognition techniques to effectively manipulate you into buying… Sound far-fetched? We’ve already got personalised Olympic summaries coming up this summer – tie in a system like Hume and your buying/browsing history and it’s not too far off… 😵💫

Anthropic strikes again – Claude’s new Projects feature

Disclaimer: I ❤️ Claude. I love the work that Anthropic, the team behind Claude, have put into Constitutional models (think Asimov’s 3 laws of robotics):

The alignment problem is an enormous one and it needs inherent flexibility – maybe this is the path to that. I particularly love Claude for writing – it’s been my go-to AI for that since it first came out. Its massive context window (effectively its working memory and, at 200k tokens, about 500 pages of text) is a game-changer, and I prefer the way it writes, the way it thinks – the lot. This affection only deepened when Claude 3 Opus came out and it got smart – and smarter yet with the recent release of 3.5 Sonnet.

But now it looks like Anthropic are coming hard for the 👑 with the release of Projects. Effectively Claude’s answer to GPTs, these look good out of the box already:

This is building on an Anthropic trend of dropping awesome new features without fanfare (if you haven’t tried Claude Artifacts yet, I strongly encourage you to do so – great set of use-cases and prompts here or how about transforming a research paper into an interactive learning dashboard in under a minute?!?) and, ultimately, the best is yet to come for Claude 3.5.

Claude models come in families – Haiku, Sonnet, and Opus – and the gap between Claude 3 Sonnet and Opus was significant. I can only imagine what Claude 3.5 Opus will be like – I guess we’ll find out soon enough 🤯👀🍿

AI vs Humans: Unsettling Results from New Turing tests

People have been asking if AI-generated text can be detected ever since ChatGPT came out (spoiler: it can’t – time to move on, unfortunately). In a fascinating (and concerning) blind study, researchers created fake student identities to submit unedited ChatGPT answers for a series of undergraduate courses. The markers, who were not told about the project, missed 94% of the AI-generated submissions, and consistently marked the AI scripts half a band higher than the human-written ones. Incredible stuff – breakdown here and original paper here. It goes without saying that this study raises enormous questions about assessment practices, take-home assessments, and the need for new approaches to evaluating student work in HE.

This also has shades of another, related experiment. Aristotle, Cleopatra, Da Vinci, Genghis Khan, and Mozart are sitting on a train… – no, it’s not the setup to a bad joke – it’s a reverse Turing test of sorts. A human has infiltrated a group of AIs and it is one of those five historical figures! Through conversation alone, they need to figure out which of them are AIs and which is the mole human. Wild stuff and they definitely do a lot better than those researchers in the above study did… well worth a watch!

Mastering AI Prompts: A Clever Hack for Optimal Results

Darren Coxon is a great follow in the AI x Education space for a range of reasons – chief among them being practical, immediately applicable advice like the following. Don’t know how to prompt an AI for a particular task? No problem – get the AI to do it for you. From Darren:

Drop the below prompt into the AI (I find Claude does the best job), filling in the blanks:

“I want to [add the task you want to complete]. Can you write a prompt to instruct a large language model to execute this optimally.”

That’s it. Drop what it gives you into the same LLM (or a different one), check the response, then tweak.

After all, why have a dog and bark yourself?
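
If you end up using this trick a lot, the fill-in-the-blank step is easy to script. Here is a minimal Python sketch, with the caveat that the function name `build_meta_prompt` and the example task are my own illustrative assumptions, not something from Darren. It only builds the text; you still paste the result into Claude (or whichever AI you prefer) yourself.

```python
# Darren's template, quoted from above; "{task}" stands in for the blank.
META_PROMPT_TEMPLATE = (
    "I want to {task}. Can you write a prompt to instruct a large "
    "language model to execute this optimally."
)


def build_meta_prompt(task: str) -> str:
    """Fill the blank in the template with a plain-language task description.

    Hypothetical helper for illustration only.
    """
    # Trim whitespace and a trailing full stop so the sentence reads cleanly.
    cleaned = task.strip().rstrip(".")
    if not cleaned:
        raise ValueError("Describe the task before building the prompt.")
    return META_PROMPT_TEMPLATE.format(task=cleaned)


if __name__ == "__main__":
    # Example task is made up; swap in your own.
    print(build_meta_prompt("write feedback on a first-year essay draft"))
```

Keeping the template in one place means that when you tweak the wording (as Darren suggests you will), every future prompt picks up the change.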

The AI landscape continues to evolve at breakneck speed, bringing both exciting opportunities and complex challenges for HE. With AIs acing exams, we’re facing the need to reassess our assessment practices in light of AI’s capabilities, while also grappling with the ethics of emotion-manipulating AIs in our classrooms. 😵💫

The global AI regulation scene is very Wild West right now – a reminder that we need to stay on our toes and in the loop with policy as it develops. Meanwhile, incredible new tools like Claude’s new Projects feature are opening up exciting possibilities for teaching and research. 🚀

As we move forward, it’s essential that we in HE remain adaptive, critical, and proactive. How can we harness these AI advancements to enhance learning outcomes while maintaining academic integrity? How do we prepare our students for a world where AI is an integral part of professional and personal life?

These are the questions we must tackle as we continue to shape the future of HE in this new age of AI. Until next time, stay curious and keep exploring! 🤩