Hi

Firstly, apologies for the extended radio silence. I’ve been on leave visiting family and friends in the UK (and enjoying the Euros ⚽) the last couple weeks. Fantastic to see them and always a good time up in Edinburgh – love me some Scotland 😍.

Anyway, enough from me – the world of AI has been busy while I’ve been away so let’s get into it:

AI Everywhere: The Road to AGI and Your Vending Machine’s New Tricks

In the last post, as part of a conversation on Global AI Regulations, I cracked what I thought was half a joke about emotionally-intelligent vending machines knowing your name, buying history, and weak points – using this to persuade and drive sales (e.g., “It’s so hot today! You really should have a Sprite” kinda thing). Well, it looks like Sam Altman might have been thinking similar things.

GPT-4o mini is significantly smarter and more than 60% cheaper than GPT-3.5 Turbo (which, while seemingly ordinary now, was amazing about a year ago). This substantial reduction in costs is a continuation of an incredible 99% drop in cost per token since 2022. OpenAI “envision a future where models become seamlessly integrated in every app and on every website” – so maybe not the vending machines just yet, but it’s coming.

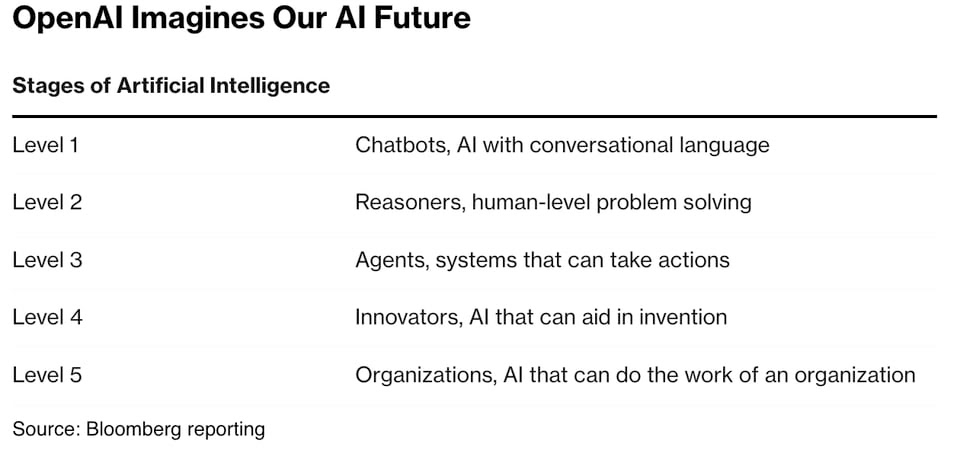

At the other end of the scale, OpenAI has come up with a five-level system for tracking progress to Artificial General Intelligence/AGI (i.e., AI systems that are generally smarter than humans – the company’s ultimate goal).

Bit underwhelming given that last line is potentially the final step before we get to AIs like Ultron, Skynet, or HAL 9000 (depending on your generation – and hopefully all better-adjusted than those three).

Current systems operate at level 1 but reportedly OpenAI are “on the cusp” of achieving level 2 – after that? Who knows – the field is divided, with Sam Altman speaking to AGI arriving in the next four or five years while Yann LeCun (Meta) thinks it’s still quite a bit further away. Either way, these are truly insane times when we are having a serious discussion about when (not if) we will have AIs like those behind C-3PO, WALL-E, or Optimus Prime in the world. 🤯

Designing AI for Education: A Developer’s Roadmap to Responsible Innovation

I didn’t even know there was such a thing as the US Office of Educational Technology but I’m definitely going to be paying more attention there now given the launch of their “Designing for Education with AI: An Essential Guide for Developers”. An overdue publication and potential gamechanger, it emphasises shared responsibility between AI developers and educators, stating, “it is unreasonable to ask educators to be primary reviewers of the data and methods used to develop AI models and related software” (p. 7).

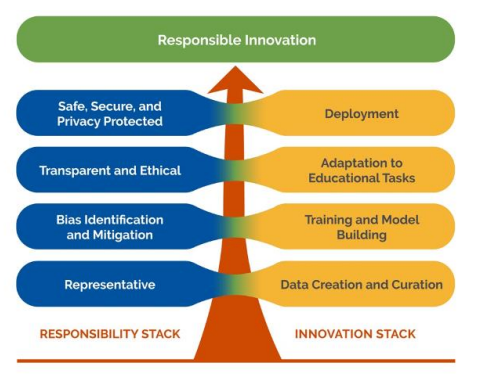

The guide proposes a dual stack approach: an Innovation Stack for technological advancements and a Responsibility Stack for ethical implementation (see pic). This framework encourages developers to create AI solutions that push boundaries while prioritising well-being, allowing educators to focus on facilitating learning and assessment. #likeit

The dual stack – innovation balanced by responsibility

Karpathy’s AI School: Reimagining Education in the Age of Artificial Intelligence

Not a huge fan of techbros positioning themselves and their particular brand of AI as the silver bullet that will “fix education” but this particular techbro might be a bit different. Andrej Karpathy has the AI credentials (OpenAI cofounder, AI Director at Tesla, Stanford lecturer) and he is hands-down one of the best science communicators in the AI game – his Introduction to Large Language Models is well worth a watch.

Anyway, he’s opening a school: Eureka Labs – “a new kind of school that is AI native” with a potentially very interesting new model of teaching – a “Teacher + AI symbiosis”.

While the idea of AI-assisted learning is intriguing, I wonder how it would impact the social aspects of education? 🤷 Either way, it’s important to remember that we are in the very first stages of this revolution – Cassie Kozyrkov (ex-Google) points out that we’re not living through an AI revolution but a UX revolution. ChatGPT and the chatbots we’re using now are just the beginning – who knows what might be coming next but Eureka Labs (and things like it) are definitely going to be worth keeping an eye out for.

AI vs. Gym Gains: Rethinking Assessment in the ChatGPT Era

“We used to consider writing an indication of time and effort spent on a task. That isn’t true anymore” (Setting time on fire and the temptation of The Button – great point, title, and piece by the inimitable Ethan Mollick). With HE assessments, we’re now at a critical juncture where the gap between the work students need to do and the corners they can cut has shrunk dramatically.

In their new piece, “Stop looking for evidence of cheating with AI and start looking for evidence of learning”, Cath Ellis and Jason M. Lodge propose a compelling metaphor: learning is like going to the gym – if you don’t put in the work, you don’t get the results #AIiskillingyourgains. But how do we ensure students are truly ‘working out’ their minds in an era where AI can produce sophisticated essays at the click of a button?

The answer, according to Zaphir et al. (2024), lies in a systematic approach to assessment design. Their MAGE framework – Mapping, AI vulnerability testing, Grading, and Evaluation – offers educators a structured method to analyse and improve their assessment tasks. This framework aligns perfectly with Ellis and Lodge’s call to focus on the process of learning rather than just the final product. But how does this work in practice? Sean McMinn’s recent experiment/reflection, Practical Insights from the MAGE framework, provides a fascinating real-world application. By combining the MAGE framework with AI tools like ChatGPT, McMinn redesigned assessments for an AI literacy course, focusing on higher-order cognitive skills and creating rubrics that are less vulnerable to AI manipulation.

The key takeaway from these collective insights? The focus should shift from creating “AI-proof” assessments to designing tasks that foster critical thinking, creativity, and real-world application. This might include more in-person demonstrations of learning. By using AI to design assessments while acknowledging its limitations, we can create authentic learning environments. The future of education isn’t about resisting AI, but leveraging it to enhance teaching and assessment. Remember Ellis and Lodge’s gym analogy: real educational gains come from effort, not just appearances. 💪

Video Revolution: AI’s Latest Leap into Visual Storytelling

AI video just continues to get better and better (and better). You will probably have seen video from Luma AI’s Dream Machine around a lot recently – but they just keep improving it, recently adding a new keyframe feature that lets users select the first and last frame of their video, giving them much greater control. Very cool. OpenAI’s Sora is still not publicly available, with the company citing safety testing – probably not a bad thing when you see videos like this one of a Reservoir Dogs-style Obama with a gun as well as famous landmarks from around the world seemingly on fire.

That said, they keep building up the hype with intriguing releases from partnerships with VFX pros, designers, and now architects with Tim Fu (ex-Zaha Hadid)’s Sora Showcase. Imagine that – a single sentence leading to rich, detailed video explainers of concepts, processes – you name it! And that’s before you turn it into an AR/VR experience that students can then explore… 🤯

Finally, Runway Gen-3 is available in beta (so you can play with it) and the output is just spectacular – worth a watch!

As AI continues to reshape the landscape of HE, we find ourselves at a crossroads of innovation and responsibility. From the potential ubiquity of AI in everyday objects to the reimagining of educational paradigms, the challenges and opportunities are immense. As educators and leaders in HE, we need to embrace these changes while ensuring that our core mission – fostering genuine learning and critical thinking – remains intact.

What’s your next step in this AI-driven educational revolution? Will you redesign your assessments to focus on ‘mental workouts’ that AI can’t shortcut? Or perhaps explore how AI tools can enhance your teaching methods? I’d love to hear people’s thoughts – best way we can learn is by sharing together.