Hi

Hope you had excellent weekends one and all! Winter is still hanging around here in Hanoi so it was grey, wet, and cold in pretty even measure. Not the funnest for now but I’m sure I’ll look back fondly in summer when the RealFeel starts pushing into the 40s °C/100s °F 🥵. A few notes from the world of AI to start off your weeks:

2024: the Year of Open (Source) AI?

So 2023 was unquestionably OpenAI (and Microsoft)’s year. Even with the Succession/Game of Thrones drama they put us through towards the end of November last year, to paraphrase George Siemens, they “sucked most of the oxygen out of the room”. So much so that other likely players only started emerging around the fringes (e.g., Midjourney, Runway, Anthropic), towards the end (e.g., Google), or not really at all (e.g., Apple and Amazon).

But that seems to be changing – with new open source AIs aiming to challenge Big Tech and democratise access to AI-powered language capabilities. AIs like Meta’s Llama or Mistral’s Mixtral offer exciting new possibilities – especially combined with the blinding speed of new architectures like Groq (see below).

“Are you making the most of AI, or just skimming the surface?” (Allie K Miller)

Great provocation. And the source, Allie K Miller (ex-Amazon and IBM), is an absolute AI rockstar and dropper-of-gems – so I’ll paraphrase the setup and get out of her way: most people will ask AI to summarise emails, documents, or transcripts and then stop there.

“The top AI users will think about how it can improve their entire workflow, even with its imperfections, and move away from doing everything from scratch to INSTEAD being a creative process manager, critical thinker, and reviewer. Challenge yourself to augment more of your process, not just step 1.”

“I think senior leadership is happily failing universities today” (George Siemens)

Speaking of provocateurs, it looks like George Siemens (Global Research Alliance for AI in Learning and Education and the University of South Australia) has no problem picking fights in the AI space with statements like the above. In one of Monash University’s fantastic 10 Minute Chats on Generative AI, Siemens argues AI is not being taken seriously enough by HE – particularly given AI operates in our core business: human cognition. He sees reports being published, committees being formed, and assessments being tweaked, with hands then largely washed of the issue and little attention paid to the fundamental questions – things like: “in what way does this co-intelligence that will exist for us or with us as we go forward as a society, how does that change what we teach and how do we do the actual teaching?”

On that, I’m stealing the phrase co-intelligence – I’ve used “bottled intelligence”, “bottled thought”, etc. but that’s a much better frame that captures what these new technologies are capable of (he says with his ever-present co-author Claude 2 and new hire Gemini Ultra nodding quietly in the background). It also touches on things I’ve been struggling with in the whole “stochastic parrot” dismissal of AI (i.e., AI doesn’t think or understand – it just “seems to”) – more on that below.

New AI for educators resources – Microsoft

Microsoft have released a new 3-hour learning path that “explores the potential of AI in education”. From a brief history of LLMs, to prompt engineering and responsible use of AI, it then addresses uses in a classroom setting. With links back to the International Society for Technology in Education and UNESCO frameworks, it’s probably well worth a look.

Justa-ism and the Problem of Human Specialness in the Age of AI (Scott Aaronson)

I normally try to end on something light, fun, or something that has that wow factor. But that “co-intelligence” phrase? It’s stuck with me – because I think it articulates something most people still don’t get about this whole space – just how powerful these things are and how much we might be missing in dismissing them with “just a” statements – it’s just a big autocomplete, just a stochastic parrot, etc.

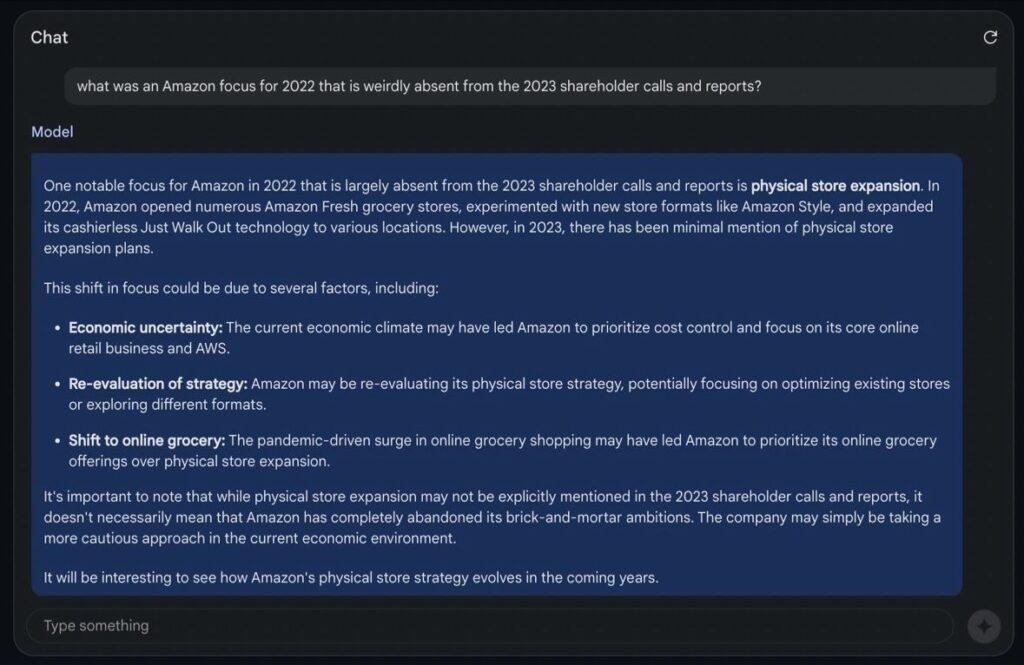

Case in point: in an interesting test of capabilities, Allie K Miller fed Gemini 1.5 Pro (a soon-to-be free version of the tool) two years of Amazon shareholder reports and earnings call transcripts. She then asked “What was an Amazon focus for 2022 that is weirdly absent from the 2023 shareholder calls and reports?”

Gemini vs the Big Four

You can see the answer above but, long story short, I have consultant friends who make lots of money doing work just like that. That’s hours of work for people who, until late 2022, were peak economic competitors – done in less than 5 minutes.

In doing this extraordinary thing, did the AI ever think – or did it just “seem to”? Whichever is true, does it matter if the results are there? OpenAI researcher Scott Aaronson relates a conversation where someone made the case “GPT doesn’t interpret sentences, it only seems-to-interpret them. It doesn’t learn, it only seems-to-learn. It doesn’t judge moral questions, it only seems-to-judge”. His reply? “That’s great, and it won’t change civilisation, it’ll only seem-to-change it!”

What do people think? What does the idea of ‘co-intelligence’ with AI mean for your work/field of study/life?

We hope this edition of the newsletter has been of interest to you. If you’re new here and it’s been useful, please do click subscribe and you can expect a weekly update every Monday from now on. If you’re already a subscriber – thanks for your ongoing interest and support! Either way, if you know others who might benefit from reading this weekly, please forward it on to them too.

Have a great week ahead – and do please let us know what you think of the idea of ‘co-intelligence’ in the comments! I’d love to hear your thoughts.

Things I didn’t know I needed to see but am happy I have because of AI #3149 – Lego Jules Winnfield eating a burger (Midjourney)