Hi

Hope you all had wonderful weekends. Spent a big chunk of mine on my roof BBQing with good friends, great food, and amazing weather. Anyway – enough food talk – a few words from the world of AI to kickstart your weeks:

Your Digital Echo: ChatGPT Mimics Your Voice

Imagine talking to ChatGPT, getting through some work, maybe doing some shopping together, and it starts talking back to you – in your own voice. #burnitwithfire 😵

Innocuously labelled “unauthorised voice generation”, this is a bug discovered while testing Advanced Voice Mode (available to some advanced users and coming …soon? to everyone else) – apparently it can mimic any voice from just a short clip. BuzzFeed data scientist Max Woolf nailed it, tweeting “OpenAI just leaked the plot of Black Mirror’s next season”. OpenAI say they have adequate mitigations in place, as outlined in their GPT-4o System Card, which details model limitations and safety testing procedures. Either way, bye bye voice-based authentication systems 👋

AI Crystal Ball: Predicting Human Behaviour in Social Science

Imagine an AI that can predict human behaviour with uncanny accuracy. It’s not science fiction—it’s happening now. Researchers from NYU and Stanford have demonstrated that Large Language Models (LLMs) can forecast social science experiment results with striking precision.

Key findings:

- AI predictions strongly correlated with actual outcomes (r = 0.85)

- Equally accurate for unpublished studies outside training data

- Sometimes outperformed expert forecasts

Potential game-changers:

✅ Quick, cost-effective pilot studies

✅ More accurate effect size estimates

✅ Identification of promising research directions

But it’s not all rosy. This breakthrough raises ethical concerns:

❓ Could AI perpetuate existing biases and stereotypes in research?

❓ What does this mean for the replication crisis in social sciences?

❓ How do we balance AI efficiency with the value of real-world behavioural experiments?

As we stand at this crossroads of AI and social science, one thing is clear: the landscape of research methodology is about to undergo a seismic shift. Are we ready for it?

AI Detection: 99.9% Accurate or Flawed Promise?

So maybe you can detect AI-generated text? It turns out OpenAI have been sitting on a text detector, which they claim is 99.9% accurate, for a couple of years now. That sounds like wonderful news for the many people concerned about the rampant contract and AI-assisted cheating going on at Australian universities, who have been asking for one of these for … well over a year now. But this confident assertion has a few immediate, obvious problems. For one, can it detect AI text washed through another AI – especially one with voice/writing samples on which to base the paraphrase? Or, more importantly, what exactly does that impressive number mean?

Cue Phillip Dawson: is it the amount of AI detected? Is it a rate of false positives? Or is it an overall accuracy figure incorporating true positives and false positives? And, if it is some combination figure, what was the proportion of AI use in the dataset used to produce these figures? Why is this a problem? We might be ok with a system that correctly identifies 50% of AI use if it only has a 0.0000001% false positive rate. We might not be ok with a system that correctly identifies AI use 99.999% of the time but has a 5% false positive rate.
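To make that trade-off concrete, here is a rough back-of-the-envelope sketch in Python. The two detection rates and false positive rates come from the example above; the cohort size (10,000 students) and the share of students using AI (10%) are numbers I have made up purely for illustration, and the function name is my own. It is not how any real detector reports its results.

```python
def detector_outcomes(cohort_size, ai_use_rate, detection_rate, false_positive_rate):
    """Estimate what a detector's headline rates mean for a whole cohort."""
    ai_users = cohort_size * ai_use_rate       # students who actually used AI
    honest = cohort_size - ai_users            # students who did not

    true_pos = ai_users * detection_rate       # AI use correctly flagged
    false_pos = honest * false_positive_rate   # honest students wrongly flagged
    true_neg = honest - false_pos              # honest students correctly cleared
    flagged = true_pos + false_pos

    return {
        "falsely_accused": false_pos,
        "missed": ai_users - true_pos,
        # Of everyone flagged, what share actually used AI?
        "precision": true_pos / flagged if flagged else 0.0,
        # Share of all students the detector classified correctly.
        "accuracy": (true_pos + true_neg) / cohort_size,
    }


# Hypothetical cohort: 10,000 students, 10% of whom used AI (assumed figures).
cautious = detector_outcomes(10_000, 0.10, 0.50, 1e-9)       # 0.0000001% false positives
aggressive = detector_outcomes(10_000, 0.10, 0.99999, 0.05)  # 5% false positives

for name, result in (("cautious", cautious), ("aggressive", aggressive)):
    print(name, {key: round(value, 4) for key, value in result.items()})
```

Under these made-up numbers, both detectors land at roughly 95% overall accuracy, yet the second wrongly flags about 450 honest students while the first flags effectively none. Change the assumed share of AI use and the accuracy figure moves with it, which is why a single headline number tells us so little.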

Dead on the money – and given research shows that AI detectors are biased against English as a Second Language writers, all the more reason to be cautious. And while I understand the appeal of a magic AI fix, one thing I’ve been enjoying enormously has been the exciting possibilities opening up in this whole space – programmatic assessment, assessing process > product, etc. Even if we suddenly found out we could detect AI, moving away from those would definitely feel like a backward step.

Tackling AI in Academia: Immediate Actions

More on AI x academic integrity – a recent TEQSA report by Jason Lodge (University of Queensland) offers a pragmatic approach to the AI challenge in higher education. With estimates of AI use ranging from 10% to 60% of student cohorts, institutions need to take immediate action.

Key recommendations include:

- Boost AI awareness among staff

- Prioritise high-stakes assessments

- Develop student partnerships for ethical AI use

- Implement transparent AI policies

- Consider innovative assessment methods (e.g., oral exams)

Lodge emphasises shifting focus from cheating detection to learning outcome verification, aligning with the Higher Education Standards Framework. While not a silver bullet, these tactics bridge the gap between current practices and the systemic changes outlined in last year’s “Assessment Reform for the Age of Artificial Intelligence” guidelines.

The Deepfake Dilemma: When Seeing Isn’t Believing

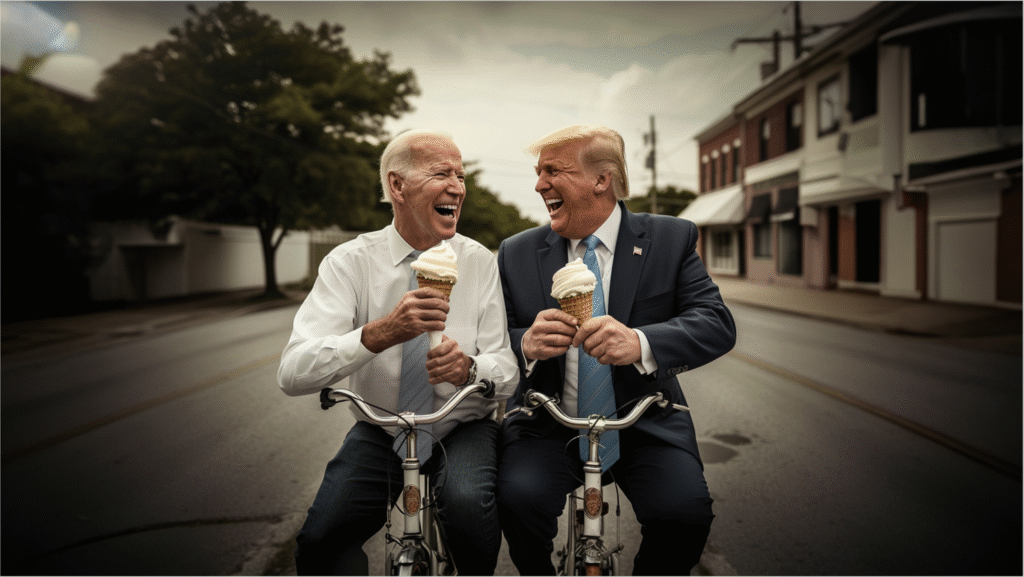

A while back, I shared this picture of two best buds out enjoying a ride on their bikes with ice-creams. Good, clean family fun.

#bestbuds

Very easy to do even then with Midjourney, but video was still a bit behind. That is changing – right now – and fast. Case in point: check out this incredible video of an extremely lifelike TED talk speaker from the ever-inspiring Nicolas Neubert – the guy who made the incredible Genesis trailer over a year ago now.

Take that, or some of the incredibly lifelike images coming out of models like Flux (banner image above), and we’re in for some very interesting times ahead.

As Bilawal Sidhu, host of the well-worth-watching TED AI Show, aptly puts it: “Faced with a daily barrage of algorithmic content, you’ll need to be a forensic or VFX expert to notice the discrepancies. The average internet user ain’t ready for this new world.”

This leap in visual AI raises pressing questions:

- How will we discern truth from fabrication in a world of perfect fakes?

- What are the implications for media literacy and public trust?

- How can educators prepare students for this new visual landscape?

As AI continues to push the boundaries of what’s possible, the need for critical thinking and digital literacy has never been more crucial.

We hope this edition of the newsletter has been of interest to you. If you’re new here and it’s been useful, please do click subscribe and you can expect a weekly update every Monday from now on. If you’re already a subscriber – thanks for your ongoing interest and support! Either way, if you know others who might benefit from reading this weekly, please forward it on to them too.

Have a great week ahead and let us know if there’s anything we’re missing that we should add to make this newsletter more useful for i) yourself and/or ii) others. This is a fast-moving, ever-evolving space and we greatly value any and all feedback. 🙏