Hi

Hope you had great weekends one and all. Mine was spent decompressing after a busy work trip down to Saigon (and the breakneck rollercoaster pace global news is keeping at the moment) with some chilled dog walks, a fantastic massage, and an even better Sunday roast #nicechangeofpace.

Anyway, enough weekend chat – here are a few notes from the world of AI to kickstart your Mondays:

Meta’s Power Play: Llama 3.1 Unleashed

Big move by Meta/Zuckerberg: they have just released Llama 3.1 405B – an open source AI on par with the best of the closed source ones. With a techworld who’s-who of partners in this enterprise including AWS, NVIDIA, Groq, Google, Microsoft, Snowflake, and more backing it, clearly the Zuck is making power moves beyond the world of BJJ/MMA.

“The thing I’m most excited about is seeing people use it to distill and fine-tune their own models… By our estimates, it’s going to be 50% cheaper, I think, than GPT-4 to do inference directly on the 405B model” (Zuckerberg). Wild stuff – with obvious implications relating back to points we’ve made recently re. the ubiquity of AI in the near future (think self-deprecating but oddly persuasive, personalised ads).

So what does this mean? People can get busy with their own versions of an AI with all the privacy and control upsides that offers. The models are big now but definitely keep an eye on this – given Llama 3.1 is open source, there’ll be someone somewhere tweaking it down to run on a clock radio or a calculator sometime soon. All of this comes with a hefty price tag, though. There are suggestions that AI investment could hit as much as $1tn in the next few years and real questions are being asked about the return on that investment in AI vs say… a laundry list of about a dozen different things humanity could be focussing on.

On that, a fascinating share is the accompanying research paper – 100 pages of knowledge-sharing the like of which we don’t see often in this highly competitive space. Thomas Wolf (Cofounder and CSO at Hugging Face) responded to this generous share saying, “Mind-blown! Maybe the single paper you can read today to join the field of LLM from zero right to the frontier”.

Policy Moves: From France to NSW

It’s been a while since last we spoke about government policy with other updates being largely restricted to refinements like these wonderful visualisations and reference materials on responsibilities arising from the AI Act.

It seems like the French might have had enough of that, with calls for concrete action, i.e., “offering access to computer, access to data, training, and not theoretical training, but training into how does that work and what you want to do in your country”. The February 2025 summit should be an interesting one.

Closer to home for some of us, the NSW government have released a report on AI in NSW. Breakdown of a range of salient points here but the one that jumps off the page for me is resourcing – i.e., create a NSW Chief AI Officer and Office of AI. 👏

The Digital Worker Dilemma: Backlash and Concerns

Spare a thought for our new graduates – what a wild time to be coming into the workplace! 😵‍💫 Worldwide, faculty and leadership are struggling with how to ensure that their content and the skills they teach will remain relevant in a world with AI. A great new study from Inside Higher Ed shows that 70% of graduates think generative AI should be incorporated into courses. More than half said they felt unprepared for the workforce.

3/4 of graduates believe this training “should be integrated into courses to prepare students for the workplace” with 69% saying “they need more training on how to work alongside new technologies in their current roles”. And that’s before you consider things like the emerging agentic AI “employees” such as Devin the software engineer and his new colleagues Einstein the service agent (Salesforce), Piper the sales agent, and Harvey the lawyer. As markers of what’s to come, not gonna lie – those freak me out a bit. That said, it’s wonderful to see some pushback from flesh-and-blood employees, like that served up by those at Lattice, a global HR and performance management platform.

A couple weeks back, in a spectacularly tone-deaf announcement, the Lattice CEO announced the company was making history, “We will be the first to give digital workers official employee records in Lattice. Digital workers will be securely onboarded, trained and assigned goals, performance metrics, appropriate systems access and even a manager. Just as any person would be.” The backlash was immediate with the initiative lasting just 3 days before it was suddenly suspended.

Great, pithy summary here:

“Terrifying. The more AI is being used all around, the more I am starting to be like this shit is going to ruin everything. Workers are already struggling enough and now they have to compete with ‘AI workers’[.] Can we put it back into its box and send it back?”

AI Education Arsenal: Top Resources for Educators

It’s been a while since we’ve done a good educational AI resources roundup – so let’s solve that sharpish:

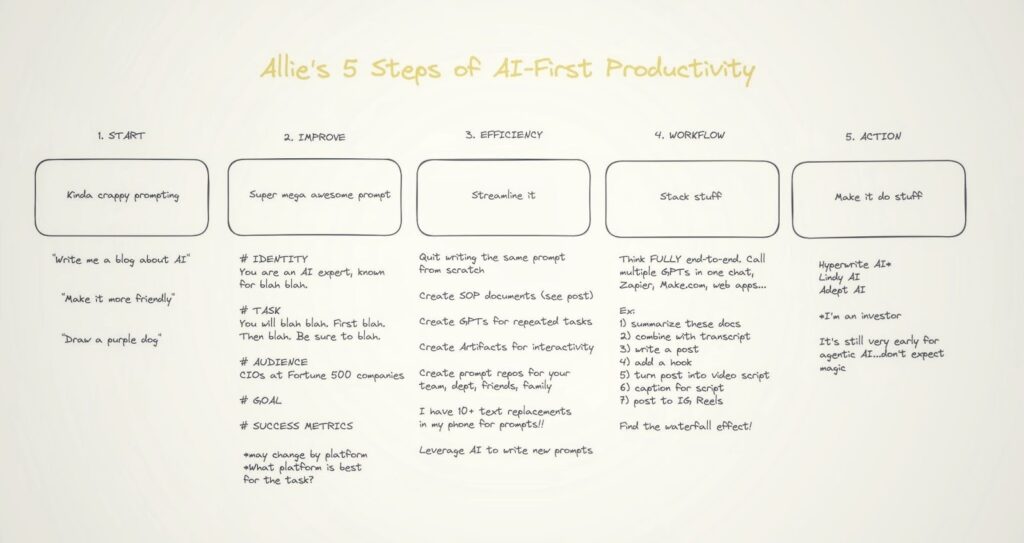

- Allie K Miller is a beast and the woman behind the “AI-first productivity” mindset (see pic). Allie’s How to use AI to 10x your productivity lightning class is fantastic so, given that, definitely check out her LinkedIn Live event this Wednesday evening where she and Conor Grennan (Chief AI Architect, NYU Stern) will “be tackling our favorite prompting techniques and practical strategies to boost your AI proficiency” as part of a launch event for their new course; and

- Harvard has a fantastic resource base that is well worth tapping in to with a wide range of different resources from “AI Text Generators and Teaching Writing: Starting Points for Inquiry” (Anna Mills – an AI OG); to “Syllabi Policies for AI Generative Tools” covering a range of different countries; to explainers like “Large language models, explained with a minimum of math and jargon” for people trying to understand the underlying tech without getting bogged down in technical details.

Not gonna lie, I move between 2 and 3 mostly but am actively working to move into 4. Respect to those who’ve made it there already 🤩

Cautionary Tales: When edTech Dreams Crash

Imagine this:

- March: Your company is featured in Time Magazine’s list of World’s Top EdTech Companies in 2024, signing eight-figure USD contracts and running splashy public launch events with the second-largest school district in the US. All of this thanks to the wonderful “Ed”, an AI “personal assistant that could point each student to tailored resources and assignments and playfully nudge and encourage them to keep going” – and in 100 languages no less!

- July: Your staff are furloughed, the CEO is gone, requests for interview go unanswered, industry experts say the quotes you pitched were a mere fraction (as little as 10%) of what you’d need to get the job done, and other companies are circling for your company’s IP. And Ed? Sorry to tell you this but Ed’s effectively dead.

Shades of Icarus, but it’s a great lesson on the dangers of treating AI as a silver bullet/magic cure-all. At this point it seems like the student data entrusted to the company is safe, but who knows next time? On that, great opportunity to remind people to do their due diligence with any AI they use – most default to absorbing any and all data they can (tho you can turn this off if you want/know how). Caveat emptor.

This cautionary tale serves as a stark reminder for HE institutions and educators. While AI promises exciting possibilities, it’s crucial to approach its implementation with realism and due diligence. Institutions should:

- Thoroughly vet AI solutions and their providers before large-scale adoption

- Ensure robust data protection measures are in place to safeguard student information

- Develop contingency plans for potential AI project failures

- Focus on sustainable, long-term AI integration rather than chasing flashy, short-term solutions

Remember, AI should augment and enhance education, not replace critical human elements of learning and teaching. As we move forward, balancing innovation with responsibility will be key to successful AI integration in HE.

—

As we work through this rapidly evolving AI-enhanced world, it’s crucial that we remain both excited about the possibilities and cautious about the challenges. From open-source models to digital workers, from policy shifts to educational resources, the impact of AI on HE is profound and multifaceted.

What’s your take on these developments? Are you experimenting with open-source AI models like Llama 3.1? How are you preparing students for an AI-integrated workplace? Share your thoughts, experiences, or concerns in the comments below. Let’s learn from each other as we shape the future of education in this AI-driven world.